Automate Exploratory Data Analysis

12 May 2017

Exploratory Data Analysis (EDA) is one of the first workflows when starting a machine learning project. Throwing a bunch of plots at a dataset is not difficult. What is much more useful is deriving insights, metrics, and observations from the current state of the datasets to guide the next stages of our ML workflow.

The 0.9.2 release of Speedml focuses on productivity improvements for the exploratory data analysis workflow.

Can the datasets tell us which plots to use to detect outliers, which features to engineer next, which model algorithms to use, or how to cleanse our datasets? This release of Speedml starts answering some of these questions for you.

We also address Python 2.x / 3.x compatibility issues and include several bug fixes.

Speed EDA

Once you have imported Speedml and initialized the datasets, you can run the eda method to speed up EDA on your datasets.

from speedml import Speedml

sml = Speedml('../input/titanic/train.csv',
              '../input/titanic/test.csv',
              target='Survived', uid='PassengerId')

sml.eda()

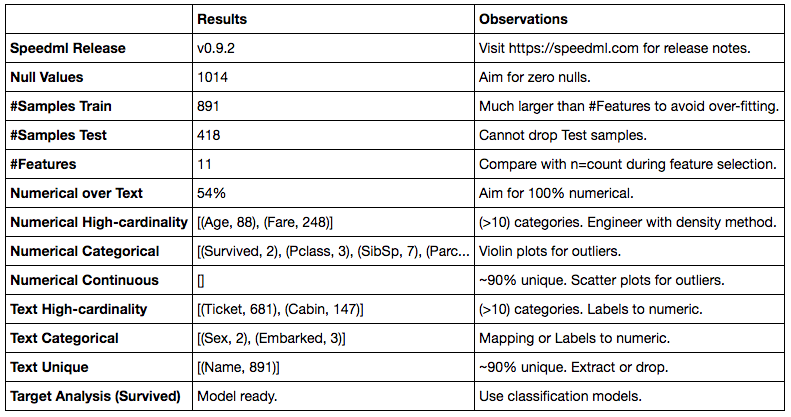

The resulting dataframe automatically provides insights and observations based on the current state of your datasets. Observations include suitable plots to use, pre-processing methods to apply, and ideal results to achieve for model readiness. We even include a model recommendation (regression or classification) based on analysis of the target variable.

As you progress through the pre-processing workflow, you can run the method again and it will tailor the results to the latest state of the datasets. For example, once you convert all text columns to numerical ones, the rows related to text features disappear. Think of this method as a progress dashboard for your workflow.
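The observations come from simple checks on the current data. As a rough, stdlib-only sketch of the idea (not Speedml's implementation), the hypothetical `eda_summary` helper below inspects a list of row dicts and reports numerical versus text features, null counts, numerical features that look categorical, and a model recommendation based on how many distinct values the target has. The `max_categories` threshold and the toy rows are assumptions for illustration.

```python
def eda_summary(rows, target, max_categories=10):
    """Summarize a dataset given as a list of dicts (hypothetical helper).

    Returns a dict mapping a metric name to its result, loosely mirroring
    the Results column of an EDA dashboard.
    """
    numerical, text, nulls = [], [], {}
    for col in rows[0]:
        present = [row[col] for row in rows if row[col] is not None]
        if len(present) < len(rows):
            nulls[col] = len(rows) - len(present)
        # bool is a subclass of int, so exclude it explicitly
        if all(isinstance(v, (int, float)) and not isinstance(v, bool)
               for v in present):
            numerical.append(col)
        else:
            text.append(col)

    def distinct(col):
        return {row[col] for row in rows if row[col] is not None}

    # Numerical features with few distinct values are likely categorical
    numerical_categorical = [col for col in numerical
                             if col != target
                             and len(distinct(col)) <= max_categories]
    # A target with few distinct values suggests classification
    if len(distinct(target)) <= max_categories:
        model = 'classification'
    else:
        model = 'regression'
    return {
        'Numerical': numerical,
        'Text': text,
        'Nulls': nulls,
        'Numerical Categorical': numerical_categorical,
        'Model': model,
    }


# Toy rows for illustration only
rows = [
    {'Survived': 0, 'Pclass': 3, 'Sex': 'male', 'Age': 22.0, 'Fare': 7.25},
    {'Survived': 1, 'Pclass': 1, 'Sex': 'female', 'Age': None, 'Fare': 71.28},
    {'Survived': 1, 'Pclass': 3, 'Sex': 'female', 'Age': 26.0, 'Fare': 7.92},
    {'Survived': 0, 'Pclass': 3, 'Sex': 'male', 'Age': 35.0, 'Fare': 8.05},
]
summary = eda_summary(rows, target='Survived', max_categories=2)
print(summary['Numerical Categorical'])  # ['Pclass']
```

Converting Sex to 0/1 integers and calling `eda_summary` again would leave the Text result empty, which mirrors how the rows for text features drop out as pre-processing progresses.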

You can query any of the results like so.

sml.eda().at['Numerical Categorical', 'Results']

This returns the complete text or list value in the Results column for the queried metric.

New plots

We are adding a couple of new plots in this release.

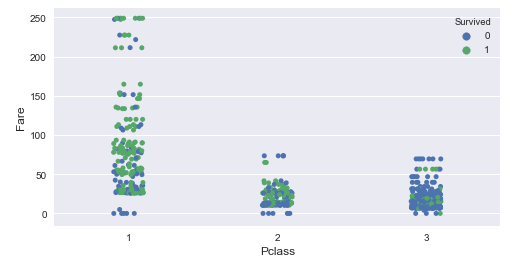

Using strip plots, we can plot a categorical feature against a continuous feature, split by the values of the target variable.

sml.plot.strip('Pclass', 'Fare')
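A strip plot is essentially a scatter plot with a categorical x axis. The hypothetical `strip_points` helper below sketches how the point coordinates could be computed before handing them to any plotting library; the jitter width and the use of the target as the point color (hue) are assumptions, not Speedml internals.

```python
import random


def strip_points(rows, category, value, hue, jitter=0.2, seed=0):
    """Lay out (x, y, hue) points for a strip plot.

    Each category value gets an integer slot on the x axis; every row is
    drawn at its slot plus a small uniform random offset (jitter) so that
    rows with the same continuous value do not hide each other.
    """
    rng = random.Random(seed)  # fixed seed keeps the layout reproducible
    categories = sorted({row[category] for row in rows})
    slot = {cat: i for i, cat in enumerate(categories)}
    points = [(slot[row[category]] + rng.uniform(-jitter, jitter),
               row[value],
               row[hue])  # hue (e.g. the target) decides the point color
              for row in rows]
    return categories, points


# Toy rows for illustration only
rows = [
    {'Pclass': 1, 'Fare': 71.28, 'Survived': 1},
    {'Pclass': 3, 'Fare': 7.25, 'Survived': 0},
    {'Pclass': 3, 'Fare': 7.92, 'Survived': 1},
]
categories, points = strip_points(rows, 'Pclass', 'Fare', 'Survived')
```

The jitter only moves points sideways within their category slot; the y values stay exact, so the spread of fares per class remains readable.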

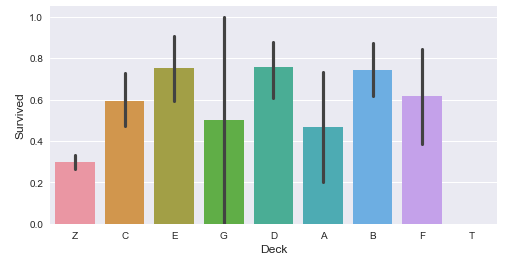

We can plot a categorical variable against the target variable using a simple bar graph.

sml.plot.bar('Deck', 'Survived')

Plot this right next to a crosstab table, using the plot.crosstab method, to get both a text-based and a visual view of the same data.
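Both views summarize the same counts. As a stdlib-only sketch, assuming the bar height is the mean of a 0/1 target per category (as in a typical bar plot of a binary target), the hypothetical helpers below compute the crosstab cells and the bar heights from toy rows:

```python
from collections import Counter, defaultdict


def crosstab(rows, a, b):
    """Count rows for each (a, b) pair: the cells of a two-way table."""
    return Counter((row[a], row[b]) for row in rows)


def target_mean_by_category(rows, category, target):
    """Mean of a 0/1 target for each category value, i.e. each bar's height."""
    sums = defaultdict(int)
    counts = defaultdict(int)
    for row in rows:
        sums[row[category]] += row[target]
        counts[row[category]] += 1
    # float() keeps true division on Python 2.7 as well as 3.x
    return {cat: float(sums[cat]) / counts[cat] for cat in counts}


# Toy rows for illustration only
rows = [
    {'Deck': 'B', 'Survived': 1},
    {'Deck': 'B', 'Survived': 1},
    {'Deck': 'C', 'Survived': 1},
    {'Deck': 'C', 'Survived': 0},
]
print(crosstab(rows, 'Deck', 'Survived')[('C', 0)])  # 1
print(sorted(target_mean_by_category(rows, 'Deck', 'Survived').items()))
# [('B', 1.0), ('C', 0.5)]
```

Each bar height is simply the crosstab count for the positive class divided by the row total for that category, which is why the two views line up.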

Automatic notebook configuration

__init__.py Reverted to using absolute or relative paths exactly as passed into the public API. Base.outpath is now used for internal file output (when storing the Xgb feature map file).

You no longer need to run the %matplotlib inline magic command in notebooks that use Speedml. We configure the notebook automatically.
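Under IPython, the notebook behaviors described in this section map to public IPython settings: the `%matplotlib inline` magic, and the shell's `ast_node_interactivity` option, which when set to `'all'` displays every expression in a cell rather than only the last one. The hypothetical `configure_notebook` helper below is one way a library could apply them; it is a sketch, not Speedml's actual code.

```python
def configure_notebook():
    """Apply notebook-friendly display settings when running under IPython.

    Hypothetical helper. Returns True if settings were applied, False when
    IPython is missing or the code runs in a plain Python interpreter.
    """
    try:
        from IPython import get_ipython
    except ImportError:
        return False  # IPython is not installed
    shell = get_ipython()
    if shell is None:
        return False  # not inside an IPython shell or Jupyter kernel
    # Same effect as typing %matplotlib inline in a cell
    shell.run_line_magic('matplotlib', 'inline')
    # Display every expression statement in a cell, not just the last one
    shell.ast_node_interactivity = 'all'
    return True
```

Guarding on `get_ipython()` returning None keeps the helper harmless when the same module is imported from a plain script.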

A single input cell can now output more than one result. This is useful when a cell contains several single-line method calls that each return a short result, especially when those calls form one seamless workflow, like so.

sml.feature.extract(new='Title', a='Name', regex=r' ([A-Za-z]+)\.')
sml.plot.crosstab('Title', 'Sex')
sml.feature.replace(a='Title', match=['Lady', 'Countess', 'Capt', 'Col',
                                      'Don', 'Dr', 'Major', 'Rev', 'Sir',
                                      'Jonkheer', 'Dona'], new='Rare')
sml.feature.replace('Title', 'Mlle', 'Miss')
sml.feature.replace('Title', 'Ms', 'Miss')
sml.feature.replace('Title', 'Mme', 'Mrs')
sml.train[['Name', 'Title']].head()

Run in one input cell, this code now outputs a series of dataframes and string messages, one for each method call.
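To see what these title-engineering steps do to a single name, they can be expressed in plain Python. This is a sketch using only the `re` module; `extract_title` and the lookup tables are hypothetical names, and we assume that only the first regex match is kept, as pandas `str.extract` would do.

```python
import re

# Same pattern as the extract call: a space, a word, then a literal dot
TITLE_RE = re.compile(r' ([A-Za-z]+)\.')
RARE_TITLES = {'Lady', 'Countess', 'Capt', 'Col', 'Don', 'Dr', 'Major',
               'Rev', 'Sir', 'Jonkheer', 'Dona'}
TITLE_ALIASES = {'Mlle': 'Miss', 'Ms': 'Miss', 'Mme': 'Mrs'}


def extract_title(name):
    """Return the normalized title found in a passenger name, or None."""
    match = TITLE_RE.search(name)  # first match only
    if match is None:
        return None
    title = match.group(1)
    if title in RARE_TITLES:
        return 'Rare'
    return TITLE_ALIASES.get(title, title)


print(extract_title('Braund, Mr. Owen Harris'))  # Mr
```

Using a raw string for the pattern keeps `\.` as a literal-dot escape for the regex engine instead of an invalid Python string escape.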

Python 3 and 2 compatibility

base.py Added the @staticmethod decorator to the data_n() method to address #8.

setup.py Added a dependency on the future package to support code compatible with both Python 3 and 2.

setup.py Updated the open() call to drop the encoding parameter for Python 2.7 compatibility.
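Two common sources of 2.x/3.x breakage relate to the changes above. In Python 2, a method defined without `self` and called through the class raises an unbound-method `TypeError`, which `@staticmethod` avoids on both versions. The Python 2 built-in `open()` has no `encoding` parameter, whereas `io.open()` accepts one on 2.7 and 3.x alike. The sketch below illustrates both; `Helpers.count_rows` and `read_text` are hypothetical names, and `io.open` is shown as an alternative to dropping the encoding, not as what setup.py does.

```python
import io


class Helpers(object):
    # Without @staticmethod, Helpers.count_rows(rows) fails on Python 2 with
    # "unbound method ... must be called with Helpers instance"; Python 3
    # would accept it. The decorator makes the call work on both.
    @staticmethod
    def count_rows(rows):
        return len(rows)


def read_text(path, encoding='utf-8'):
    """Read a text file with an explicit encoding on Python 2.7 and 3.x."""
    # io.open accepts an encoding argument on both versions
    with io.open(path, 'r', encoding=encoding) as handle:
        return handle.read()
```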

Starting with this release, we are adding release testing and feature exploration notebooks for Python 3.x and Python 2.x kernels. You can view and download the 0.9.2 notebooks for either kernel from GitHub.

Saving performance parameters

We can now save the metrics from the model performance methods as a slug in the submission file name. This lets us record submissions and order them by changes in these metrics.

sml.save_results(
    columns={'PassengerId': sml.uid,
             'Survived': sml.xgb.predictions},
    file_path='output/titanic-speedml-{}.csv'.format(sml.slug()))

This results in file names like these.

titanic-speedml-e16.63-m83.33-s86.53-f78.73.csv

Here the CV error is e=16.63% (0.1663), the model accuracy relative to other models is m=83.33%, the sample prediction accuracy is s=86.53%, and the feature selection accuracy score is f=78.73%.
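The slug can be reproduced and parsed back with a few lines of Python, which is also how submissions can be ordered by a metric. This sketch assumes, from the example file name, that each metric is stored as a percentage with two decimals in the order e, m, s, f; `make_slug`, `parse_slug`, and `order_by_error` are hypothetical helpers, not Speedml's `slug()` implementation.

```python
import re

# Assumed slug layout, inferred from the example file name: e, m, s and f
# stored as percentages with two decimals, separated by hyphens.
SLUG_RE = re.compile(r'e(\d+\.\d+)-m(\d+\.\d+)-s(\d+\.\d+)-f(\d+\.\d+)')


def make_slug(error, model_acc, sample_acc, feature_acc):
    """Build a slug such as 'e16.63-m83.33-s86.53-f78.73' from 0-1 scores."""
    return 'e{:.2f}-m{:.2f}-s{:.2f}-f{:.2f}'.format(
        error * 100, model_acc * 100, sample_acc * 100, feature_acc * 100)


def parse_slug(file_name):
    """Recover the four metrics, as percentages, from a file name."""
    match = SLUG_RE.search(file_name)
    if match is None:
        raise ValueError('no metrics slug in ' + file_name)
    error, model_acc, sample_acc, feature_acc = map(float, match.groups())
    return {'e': error, 'm': model_acc, 's': sample_acc, 'f': feature_acc}


def order_by_error(file_names):
    """Sort submission files from lowest to highest CV error."""
    return sorted(file_names, key=lambda name: parse_slug(name)['e'])


print(make_slug(0.1663, 0.8333, 0.8653, 0.7873))
# e16.63-m83.33-s86.53-f78.73
```

The pattern requires digits on both sides of each decimal point, so the `.csv` extension is never mistaken for part of the last metric.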